In business conversations, the metaverse is quickly becoming a common topic. Driven by technological development, new applications for immersive experiences, and entirely new concepts, the metaverse is here for us to explore in countless scenarios. This line of thought is what brings us here today: what about presentations in the metaverse?

This SlideModel R&D article series is our way of sharing the findings and initial conclusions from comprehensive research about presentations in the metaverse. For months, we experimented with different aspects of hosting presentations in the metaverse, user interaction, and how the experience differs from our real-world presentation templates. We invite you to follow our research path into presentations in the metaverse.

Table of Contents

- The story behind SlideModel and the Metaverse

- State-of-the-Art: Potential Applications of the Metaverse in our days

- Hardware Solutions for Metaverse Meetings

- Tested software solutions for metaverse presentations

- Case Study: SlideModel hosting a meeting in the metaverse

- Translating the current real-world presentations into the metaverse

- Conclusion: Are presentations in the metaverse worth the effort these days?

The story behind SlideModel and the Metaverse

What drove us to research if presenting in the metaverse is possible?

Technology is part of SlideModel’s DNA. We are in constant pursuit of new business trends in order to deliver updated content to our audience and subscribers. Within this process, we started to get exposure to the metaverse concept and its implications. Despite being commonly labeled and scoped to Web3 technologies and crypto trading, we believe there’s an unlimited potential yet to be acknowledged when it comes to the metaverse.

Only time will tell which shape the technology will take, so we cannot predict results accurately; still, for the presentation ecosystem, there is plenty of room for development, and getting immersed in the metaverse seems like a natural direction to research.

The decision to build an R&D area

When the challenge of researching the metaverse emerged, we acknowledged the lack of capabilities in our current operations team to research or deal with this technology. SlideModel opted to build a Research & Development team to stay in touch with the latest trends in technology and presentations without the current experience bias.

Led by Francisco Magnone, an Architect and specialized consultant in designing virtual and physical spaces, this SlideModel R&D team benefited from his expertise in the design industry and 3D technologies, broadening the possibilities to explore theories past our comfort zone.

How can the metaverse help presenters?

We believe the natural path to the metaverse is to conveniently reduce the expenses of hosting events like congresses, educational workshops, corporate meetings, etc., as it bridges the gap between people, regardless of their location. Attendees would not just take part through an avatar but would actually be immersed in the event, experiencing products or services firsthand without needing to leave home.

If we consider the common issues shown in virtual presentations, the metaverse can settle such inconveniences by reducing social anxiety, giving room to focus on the content and not tech-related hurdles. Again, these are just our hypotheses, though we believe there’s potential for companies to explore their metaverse opportunities.

Defining the metaverse: Technical concepts to know

It is not the purpose of this article to dwell on definitions of the metaverse. We perceive the current status of the metaverse as something broad, as a way of explaining the future interactions between humans and technology. Technically speaking, we should mention the following definitions to bring some context to this discussion.

XR – Extended Reality

XR stands for “extended reality,” an umbrella term that covers VR, AR, and MR. All XR tech takes the human-to-PC screen interface and modifies it, either by 1) immersing you in the virtual environment (VR), 2) adding to or augmenting the user’s surroundings (AR), or 3) both of those (MR).

VR – Virtual Reality

The use of computer modeling and simulation that enables a person to interact with an artificial three-dimensional (3-D) visual or other sensory environment.

AR – Augmented Reality

An enhanced version of the real physical world is achieved through digital visual elements, sound, or other sensory stimuli delivered via technology. It is a growing trend among companies involved in mobile computing and business applications.

MR – Mixed Reality

A view of the real world—the physical world—with an overlay of digital elements where physical and digital elements can interact.

In popular culture, the metaverse is closely linked with VR due to the usage of headsets. Still, we believe there is more to extract from this: the relationship between humans, digital twins, and avatars. For that reason, let’s analyze the following definitions.

Mirror World

A mirror world is a representation of the real world in digital form. It attempts to map real-world structures in a geographically accurate way. Mirror worlds offer a utilitarian software model of real human environments and their workings.

Mirror world is the term that fits the reality which many companies seek to explore. This comes from understanding the metaverse as a way to explore the unbuilt but within the boundaries of what feels “natural” for us in our daily reality. We will discuss this topic in detail through the example of the Real Estate industry in the application of VR technology.

Digital Twin

A digital twin is a virtual representation that serves as the real-time digital counterpart of a physical object or process.

Avatar (~Meta-Persona)

The definition of an avatar is something visual used to represent non-visual concepts or ideas or an image used to represent a person in the virtual world of the Internet and computers. A 3D avatar is a digital persona, a replica of yourself that you can create to represent yourself on the internet.

It is worth mentioning that avatar and digital twin are not equivalent definitions. An avatar is the graphical representation of our digital persona but doesn’t carry the identity itself. It is through the interaction of the human that the avatar becomes the graphical representation of the digital twin.

These definitions may bring more light to the concepts behind the metaverse, but we consider them insufficient to prove the worth of the metaverse for presenters. Therefore, to explore the possibilities the metaverse could offer to our niche, we approached this research by putting ourselves in the shoes of an individual, seeking to adapt their daily work routine to the metaverse.

Explanation of the research methodology

We designed the research approach with three objectives: a) Understand the metaverse through the eyes of current developers, b) Understand the experiences and capabilities offered by current metaverse implementations, and c) Reimagine the world of presentations (one-to-many communication scenarios) within the metaverse scope.

In order to pursue these goals, the activities involved: i) Study the current state-of-the-art of the metaverse, ii) Invest in technology and set up a metaverse experience, iii) Create virtual experiences and evaluate their potential in the scope of one-to-many content delivery, and iv) Create a virtual experience with people outside the R&D team, and evaluate the process and outcome.

Our initial research found there are multiple software solutions to interact with the metaverse:

- Horizon Workrooms (by Meta)

- Rove (by Zoom)

- Spatial

- Immersed

- AltspaceVR (by Microsoft)

- Decentraland

- Sandbox

- Cryptovoxels

- Mona

- LivingCities

Still, not all the platforms were tailored for the kind of experience we intended. Intersecting our interests with the capabilities offered by those platforms, we came up with a shortlist of four different venues that were able to host metaverse meetings for business professionals:

- AltspaceVR

- Horizon Workrooms

- Rove

- Spatial

To explore the capabilities for metaverse meetings, we acquired a Meta Quest 2 headset. With it, we tested whether it was possible to create work environments to host our meetings, assessed the graphics quality for corporate meetings, and gathered performance insights. After that, the R&D team was challenged to apply the acquired knowledge and host the environment for one of the regular SlideModel board meetings.

State-of-the-Art: Potential Applications of the Metaverse in our days

The hype built around the metaverse concept can lead us to believe its application is strictly linked to the gaming industry and Web 3.0 technologies. During our research, we found an immense amount of resources that spoke about the seriousness behind the current status of the metaverse.

For example, during the 2018 L.E.A.P. conference, the concept of “Magicverse” was introduced to explain how spatial computing will manifest in the near future. Magicverse envisions datasets arranged layer by layer over the digital world, complementing uses and applications where the current physical world is limited. The concept is also closely linked to technological developments in 5G networking and their application in building smart cities.

We consider that AR is shaping new behavioral patterns in consumers, especially for the younger audience, and this is a line of thought that several Silicon Valley companies are committed to exploring. Companies like Facebook went as far as to rebrand themselves (now Meta) to reflect this new take on technology. This is a statement of how technology development reflects on the mindset of business entrepreneurs, even when the full range of applications is still not apt for the effort required, such as with VR advertising.

At this point, we believe the metaverse is an ecosystem of isolated technologies that tend to work somewhat interconnectedly. This may seem vague, but the reality is no one has a direct answer to the outcome of the metaverse. It feels like predicting the direction of the internet in a broad spectrum of possibilities; hence, the take regarding metaverse applications remains a matter of deep philosophical questioning.

The race to conquer the metaverse has involved the four main tech giants, with Meta setting the trends for tomorrow in technology and software. In a later section, we will mention some exciting applications of Meta in shaping the education of tomorrow. Google’s approach to the metaverse comes from deep research and simultaneous projects like Project Iris and Project Starline, yet its potential application remains linked to the Google Glass technology. The recent acquisition of MicroLED startup Raxium leads us to believe Google intends to delve into scientific or medical applications for the metaverse rather than just sticking to media and advertising.

On the other hand, Amazon remains somewhat silent compared to its tech competitors in terms of metaverse research. The company is discreetly recruiting personnel, and the expectation is to see an integration of AWS technology with its broad range of smart home solutions. The latest is Amazon Astro, a household monitoring robot about the size of a vacuum cleaner, fully integrated with Alexa.

Microsoft invested efforts in both hardware and software when the metaverse race started. The development of the HoloLens headset and AltspaceVR is backed by expertise in the gaming industry with the Xbox gaming platforms. An integration between Microsoft Teams and the metaverse is bound to happen with the introduction of Mesh for Microsoft Teams. But we cannot neglect an important aspect of Microsoft’s technology development: its capability to build powerful simulation tools like the world-renowned Microsoft Flight Simulator. It would be interesting to see future applications of the HoloLens headset in the manufacturing industry, since companies like Mercedes-Benz, Audi, Toyota, and EATON, among others, started to lean towards Industry 4.0 manufacturing thanks to it.

In the following sections, we will present two applications that we believe suit the metaverse at its current technological stage.

Exploring the unbuilt: Real Estate Investments and the Metaverse

The real estate industry sought the opportunity to reduce expenses in what’s called the “live experience.” Apartment units were introduced to potential buyers by building an entire unit during construction, ready to “be lived” by a family. Realtors thus had an extra asset for convincing potential buyers. But COVID-19 happened.

Restrictions imposed by the pandemic affected many industries, and real estate was no exception. Demand for realistic 3D videos then started to increase, although the experience wasn’t as close to “living” the space as in “open house” scenarios. The ArchViz industry had developed multiple pathways to hyperrealism through advances in its software offerings: GPU rendering, cloud-based collaboration, revamps of rendering engines for realistic texture and lighting results, etc. The answer to satisfying the real-life experience with 3D graphics came from a tool used mainly in the gaming industry: Unreal Engine.

Thanks to UE4, 3D artists could bring highly-detailed 3D models created in software like Autodesk 3ds Max and not only use real-world textures but also make the experience interactive. Users could walk, jump, run, change their point of view, touch the furniture (as when opening kitchen cabinets), or sometimes even adjust the finish material to their taste.

Before you consider this a fancy experience, imagine building entire cities of that degree of quality and populating them with unbuilt real estate projects. That’s when investors jump on the bandwagon, as they experience first-hand what their capital will look like once built. The best part of these digital experiences is that users don’t need VR headsets, as UE4 and UE5 allow a cloud-based experience, with high-end design computers or servers running the presentation.

Education in the Metaverse: the VictoryXR experience

Experience tells us that students have difficulty comprehending abstract topics in specific disciplines, such as science-related subjects. The metaverse for education is one exciting venture to explore, whether for students in graduate courses or for academic research.

Founded in 2016, VictoryXR is bringing education closer to students thanks to the usage of VR and AR. With the onset of the pandemic, Zoom became the tool for educators to stay in touch with students so that their academic growth would not freeze for nearly two years. This experience led many to question the potential applications of AR and VR technology in education, and this is where VictoryXR steps in. Biology students at Morehouse College, USA, studied cell composition in detail, explored the human heart, and interacted with molecules thanks to VR headsets.

Another exciting application comes from the University of Maryland Global Campus, an online-only school that takes after the MIT edX experience. Its introductory biology and astronomy courses are set to integrate Meta Quest 2 headsets in a partnership between UMGC, Meta, and VictoryXR. Thanks to this application, traditional education experiences such as dissections in biology classes will be replaced by VR experiences, an interesting development for animal rights advocates. The entire VR education experience is part of Meta’s Immersive Learning project, and the results will be tracked to quantify the value obtained in comparison with traditional education methods.

We can imagine multiple applications of the metaverse in academic and education environments. For civil engineering students, the capabilities of metaverse presentations can help build spatial awareness and a better understanding of topics like tensile-structure design; moreover, it becomes a visually appealing method to showcase the findings for a final term evaluation project.

Another alternative comes from the metaverse’s capabilities for training in specific careers, such as electrical work. Setting up detailed representations of real-world buildings and their machinery allows future electrical workers to be coached, through their digital twins, in the tasks involved in their job without any risk. Thanks to this approach, people can face their daily job situations with a broader knowledge background, without risking life-threatening accidents by mishandling machinery. The aviation industry has taken a similar approach by using flight simulators for students. Simulators help them acquire fluency in aviation radio communications, practice flying skills within a safe environment at reduced cost, or even test dangerous flying conditions to instruct students on the required protocol.

The case of Decentraland: Where luxury meets the metaverse

In the previous two points, we explored practical applications of the metaverse thanks to the usage of current AR and VR technologies. But what if we talk about living the whole digital experience, an entirely digital world? Decentraland is a metaverse platform built on the concept of “buying” digital parcels, a process required to create environments. After a space is created, users can freely roam and participate in events.

Recently, Decentraland gathered the interest of brands like Cartier, Nike, and others for their future events. Nike’s initial incursion into the metaverse happened in 2021, with the release of Nikeland in Roblox. Verizon Communications Inc. tested the capabilities of the digital world through gaming platforms to raise brand awareness.

One interesting application case of Decentraland comes from the Danish architecture studio Bjarke Ingels Group (commonly known as BIG), which designed the virtual office for Vice Media Group in Decentraland.

Is Decentraland worth the investment? It drives hype to brands as they become part of an exclusive network. It can be replaced by any other platform, except for the limited number of spectators allowed in an event.

Hardware Solutions for Metaverse Meetings

Metaverse implementations are designed around the use of 3D experience hardware. Although it is possible to access metaverse spaces with laptops and mobile phones, the experience is nowhere near that of powering up a headset. These technologies incorporate realistic sound, hand and eye movement tracking, and spatial understanding. So, which options work for our research purpose?

The best-known headsets available in the market are:

- HoloLens 2

- Meta Quest 2 (Oculus)

Other options include the Sony PlayStation VR (mainly intended for gaming), the Valve Index VR Kit, the HTC Vive Pro 2, and the HP Reverb G2. Out of the entire selection, we consider the HoloLens 2 and the Meta Quest 2 to be the two units with the best price/quality ratio. Apple previously tried to venture into the AR glasses project; still, it wasn’t welcomed by consumers due to its performance.

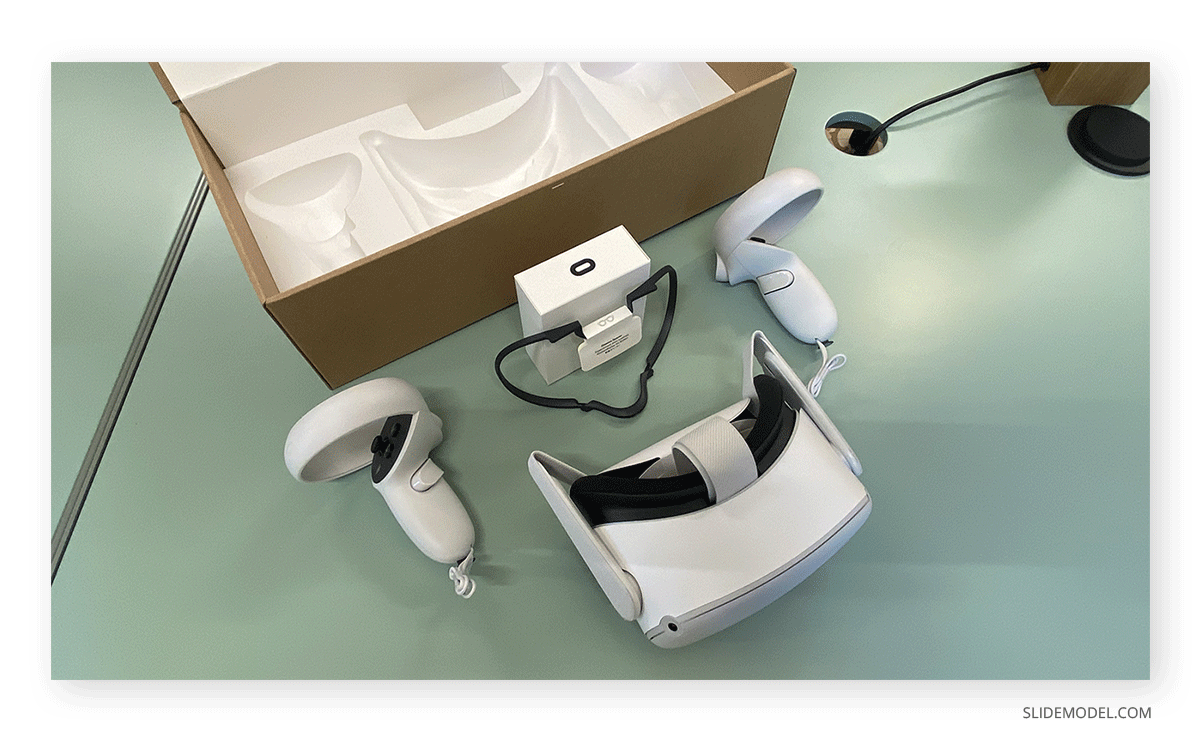

We picked the Meta Quest 2 unit due to its considerably lower price compared to the HoloLens 2, given that both feature similar specs. The next section covers the steps we followed during our research to work with the Meta Quest 2 (formerly known as Oculus VR) to start a metaverse meeting.

Meta Quest 2 unboxing

SlideModel acquired its Meta Quest 2 unit via Amazon Spain, and it was delivered the very next day to Barcelona. The packaging is simple: the unit is laid on a plastic tray with two hand controllers (known as Touch Controllers), a silicone protector, and a battery charger (much like a phone charger).

Interestingly, there’s an extra accessory included: a mount for people who need to wear eyeglasses.

After unboxing, the unit needs to be charged. The battery life of the Meta Quest 2 is estimated at 2 to 3 hours, so charging it between sessions is advisable.

Our experience starting the Meta Quest 2

First, you need to connect to the internet via your mobile phone and download the Oculus app. The pairing with the headset is handled via mobile, and almost instantly, it requires a login with your Meta account.

After that, you need to create what’s called a “custom VR profile,” in which you set the privacy settings, your interests (“required” for tailored ad experiences), and enter your payment method. This is one way in which Meta monetizes the apps, as some of them require subscription plans to explore all their functions.

Finally, you need to select your device model (either Quest 2, Quest, Rift/Rift S, or Go) and use the mobile app to install the apps to use.

How we configured the Oculus

We highly recommend you check this documentation by Oculus on how to set up and configure the Oculus unit. With the initial setup done, the headset requires configuring what’s called Guardian. It is sensor calibration software that defines the boundaries within which you are able to move. If you switch between locations, the Oculus will prompt you to start again with Guardian, as it only keeps the configuration of one place.

Place yourself in the middle of a room of 2 meters by 2 meters. The area has to be obstacle-free; if your workspace doesn’t meet the requirements, you will need to use the Oculus while seated or standing in one spot with the Stationary Mode enabled.
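The space rule above can be sketched as a small check. This is only an illustration of the decision we followed, not part of any Oculus tooling: the function name and the "roomscale" label are our own hypothetical choices, while the 2 m by 2 m threshold and the Stationary Mode fallback come from the text.

```python
# Minimal sketch of the Guardian space rule described above.
# Hypothetical helper: the function name and the "roomscale" label are
# illustrative assumptions, not Oculus API names.

MIN_SIDE_M = 2.0  # from the text: a clear area of 2 m by 2 m


def choose_guardian_mode(width_m: float, depth_m: float, obstacle_free: bool) -> str:
    """Return "roomscale" when the play area qualifies, else "stationary"."""
    if width_m <= 0 or depth_m <= 0:
        raise ValueError("room dimensions must be positive")
    fits = width_m >= MIN_SIDE_M and depth_m >= MIN_SIDE_M
    # Both conditions must hold; otherwise fall back to Stationary Mode.
    return "roomscale" if fits and obstacle_free else "stationary"


if __name__ == "__main__":
    print(choose_guardian_mode(2.5, 2.0, obstacle_free=True))
    print(choose_guardian_mode(1.5, 3.0, obstacle_free=True))
```

Measuring the room before putting on the headset saves a calibration round, since Guardian has to be redone whenever the location changes.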

Creating an Avatar

An avatar, your digital persona, is required for any metaverse application. For some, this may be familiar, as many gaming apps require creating avatars, and it is a straightforward process with accurate personalization.

In the video below, we present our process for creating an avatar with Meta Quest 2 in Spatial.

Tested software solutions for metaverse presentations

As we mentioned in a previous section, the goal set for our R&D team was to deploy a board meeting for SlideModel’s management in the metaverse. These board meetings, usually held in person or via Google Meet, required some essential elements:

- Screensharing capabilities

- Good internet connection

- Face-to-face communication

We tried four different metaverse platforms to potentially host our regular board meetings in the metaverse. The procedure followed to test each of these software solutions was as follows:

- Downloading the app and creating an account

- Creating an avatar

- Configuring the space

- Testing the avatar’s movement in the space

- Testing the user’s experience of movement in the space

- Transitions between spaces

- Adding third-party objects to the scenes

- Projecting 2D presentations and images in virtual 3D spaces

- Testing the 2D experience for non-VR users

For the tested platforms, we expected to host metaverse meetings in a formal business environment, where users can interact with each other and use tools like screen sharing or note-taking.

AltspaceVR

Update 2024: At this time, AltspaceVR has been shut down by Microsoft.

AltspaceVR was a platform built by Microsoft to host events in the metaverse. It was free of charge, but you needed a headset to fully experience the platform’s performance. Otherwise, a 2D view mode required 8GB of RAM, a 6th-gen Intel i3 processor or newer, and Windows 8 onwards.

The first advantage that AltspaceVR offered was its public calendar of events, which could help users connect with conferences and similar events. You could also subscribe to channels according to your interests, getting notifications whenever a channel went live.

AltspaceVR already came with pre-built VR environments for hosting meetings in the metaverse. These were known as “Altspace Worlds” and resembled, in a very game-like style, typical meeting places such as libraries, pubs, streets, and even fantasy places.

Our experience with AltspaceVR didn’t meet our expectations. For starters, we felt the graphics didn’t look realistic enough for a professional environment. They were reminiscent of Roblox or Minecraft, making the user feel like taking part in a videogame instead of a professional meeting.

The transitions between spaces showed a loading screen while the world content for that space was downloaded. We tried to switch between locations, which wasn’t entirely possible; we had to join a different event instead of navigating through multiple scenes to change locations.

Horizon Workrooms

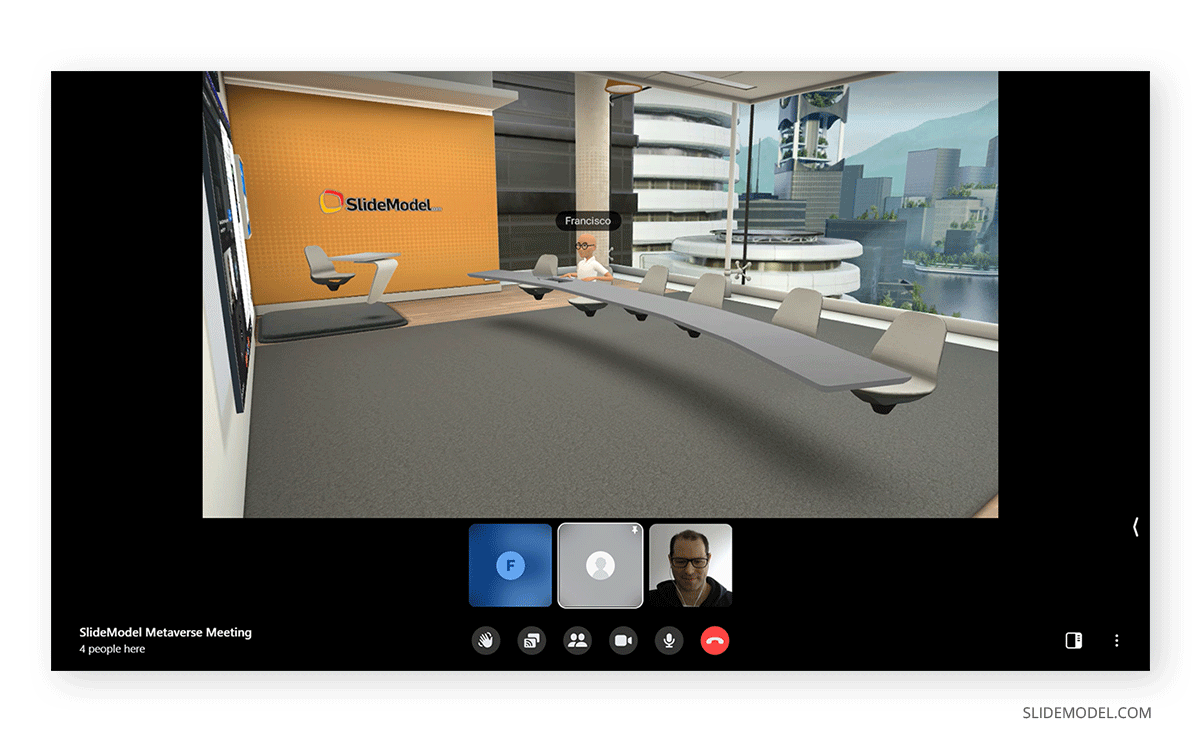

One of the platforms we will showcase in the case study section is Horizon Workrooms, built by Meta. Given its native compatibility with the Meta Quest 2, it had a solid performance for hosting a meeting. It is available via the Desktop app (not compatible with Safari, best compatibility rate with Google Chrome) or the Meta Quest 2 app.

We tested two levels of experience with Horizon Workrooms. The first is as a spectator via the Desktop app. It only allows interacting with the other users via a screen, where you don’t get gestures and cannot move in the space. The second uses Meta Quest 2, in which Horizon Workrooms prompts you to build an avatar, and you can access all the gestures (after configuring them using the touch controllers).

Room customization is only possible if you use the headset. You need to upload your company’s posters (landscape or portrait orientation) and the logo. Posters in either orientation must be JPG, JPEG, or PNG files, with a minimum size of 172 x 121 pixels and a file size of less than 4MB. The logo must be a PNG file with a transparent background, bigger than 720 x 405 px, with a maximum file size of 4MB. Upload the files from your computer via the Oculus app, and you can then update the space using the headset.
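Before uploading, it can help to pre-check the files against the limits listed above. The following is a minimal sketch under our own assumptions, not an official Meta tool: the `Asset` class and the function names are hypothetical, "4MB" is read as 4 x 1024 x 1024 bytes, "bigger than" is read as strictly larger, and the 172 x 121 minimum is applied to the longer and shorter side so that it covers both orientations. Dimensions and transparency are passed in by hand rather than read from the image.

```python
from dataclasses import dataclass

# Assumption: "4MB" is interpreted as 4 MiB; the source does not specify.
MAX_BYTES = 4 * 1024 * 1024


@dataclass
class Asset:
    """Hypothetical description of a file to upload (values supplied by hand)."""
    filename: str
    width: int
    height: int
    size_bytes: int
    has_transparency: bool = False


def _extension(filename: str) -> str:
    return filename.rsplit(".", 1)[-1].lower() if "." in filename else ""


def check_poster(asset: Asset) -> list:
    """Return a list of problems; an empty list means the poster passes."""
    problems = []
    if _extension(asset.filename) not in {"jpg", "jpeg", "png"}:
        problems.append("poster must be JPG, JPEG, or PNG")
    # Assumption: compare by longer/shorter side to cover portrait posters.
    long_side, short_side = max(asset.width, asset.height), min(asset.width, asset.height)
    if long_side < 172 or short_side < 121:
        problems.append("poster must be at least 172 x 121 px")
    if asset.size_bytes >= MAX_BYTES:
        problems.append("poster must be smaller than 4MB")
    return problems


def check_logo(asset: Asset) -> list:
    """Return a list of problems; an empty list means the logo passes."""
    problems = []
    if _extension(asset.filename) != "png":
        problems.append("logo must be a PNG file")
    if not asset.has_transparency:
        problems.append("logo needs a transparent background")
    if asset.width <= 720 or asset.height <= 405:
        problems.append("logo must be bigger than 720 x 405 px")
    if asset.size_bytes > MAX_BYTES:
        problems.append("logo must not exceed 4MB")
    return problems


if __name__ == "__main__":
    print(check_poster(Asset("poster.jpg", 1920, 1080, 1_000_000)))
    print(check_logo(Asset("logo.jpg", 720, 405, 200_000)))
```

Returning a list of problems rather than a single pass/fail flag lets you fix every issue in one pass before reaching for the headset.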

To have a truly immersive experience, you need to download the Oculus Remote Desktop app to share your computer’s screen. This topic will be covered in detail in our Case Study section for Horizon Workrooms.

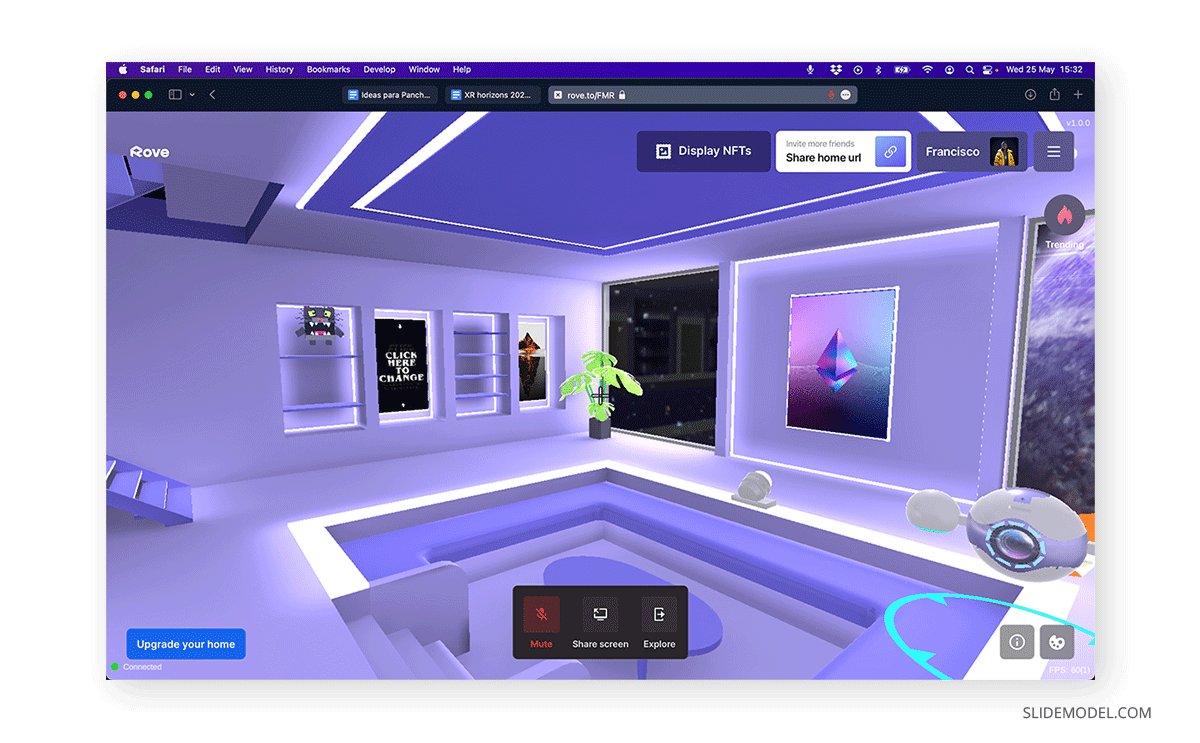

Rove

Rove, by Zoom, is another platform to connect via the metaverse for online meetings, allowing users to customize the space. Much like what happened with AltspaceVR, in our opinion, the graphics look outdated and cartoonish. The aesthetic, we feel, is inspired by gaming platforms like Roblox.

Rove aims to become a network of 3D websites where the e-commerce experience can become tangible for users. That’s the reason why you can find integrations for Shopify, WordPress, Behance, Slack, and even Reddit. Users can build entire rooms to host their 3D website shops without coding knowledge, and since the whole space is customizable, it is an ideal platform to exhibit NFT art.

According to Rove, the platform allows up to 10 users with speaking permissions to interact simultaneously, whereas an event is limited to 30 attendees in the same space.
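To see what these limits mean when planning an event, here is a small sketch. It is purely illustrative and not a Rove API: the function name is hypothetical, and we assume the 30-user limit counts everyone in the space, speakers included.

```python
# Limits quoted in the text; the helper itself is a hypothetical sketch.
SPEAKER_LIMIT = 10
ROOM_LIMIT = 30  # assumption: this total includes the speakers


def plan_room(invited: int, speakers_requested: int) -> dict:
    """Split an invite list into speakers, listeners, and people who will not fit."""
    if invited < 0 or speakers_requested < 0:
        raise ValueError("counts must be non-negative")
    admitted = min(invited, ROOM_LIMIT)
    speakers = min(speakers_requested, SPEAKER_LIMIT, admitted)
    return {
        "speakers": speakers,
        "listeners": admitted - speakers,
        "turned_away": invited - admitted,
    }


if __name__ == "__main__":
    print(plan_room(invited=45, speakers_requested=12))
```

For a board meeting like ours, both limits are comfortable; they only start to matter for webinars or company-wide events.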

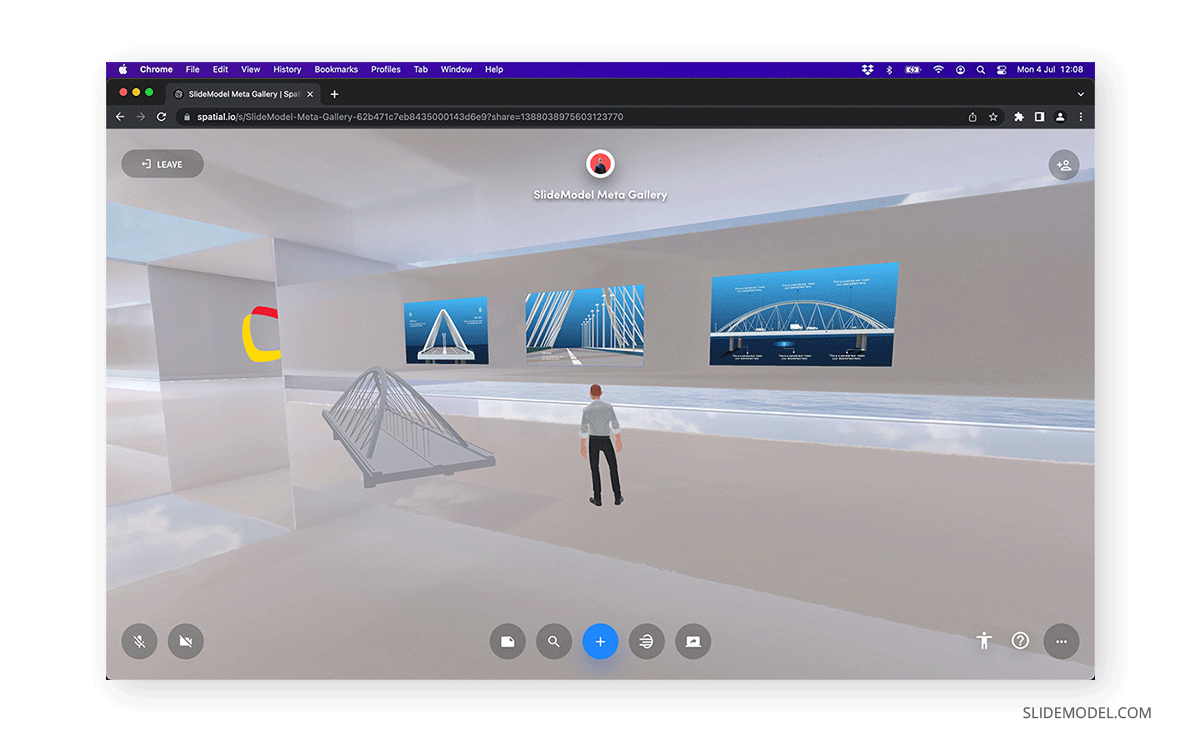

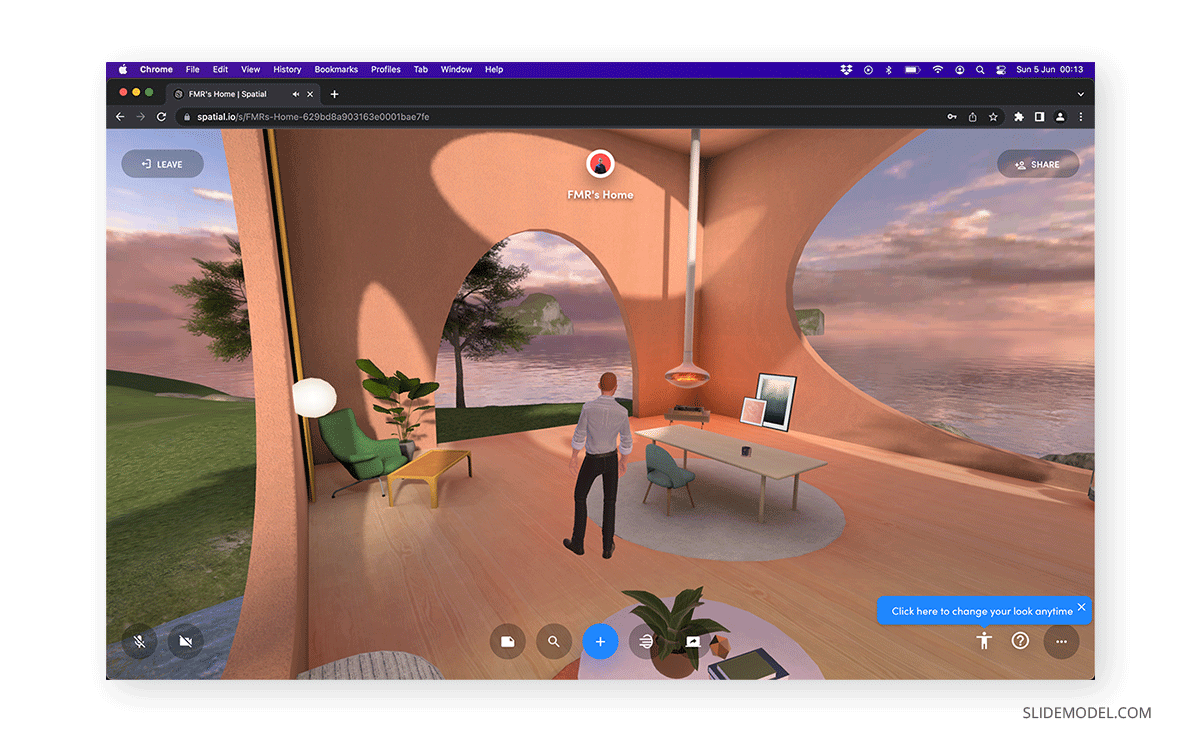

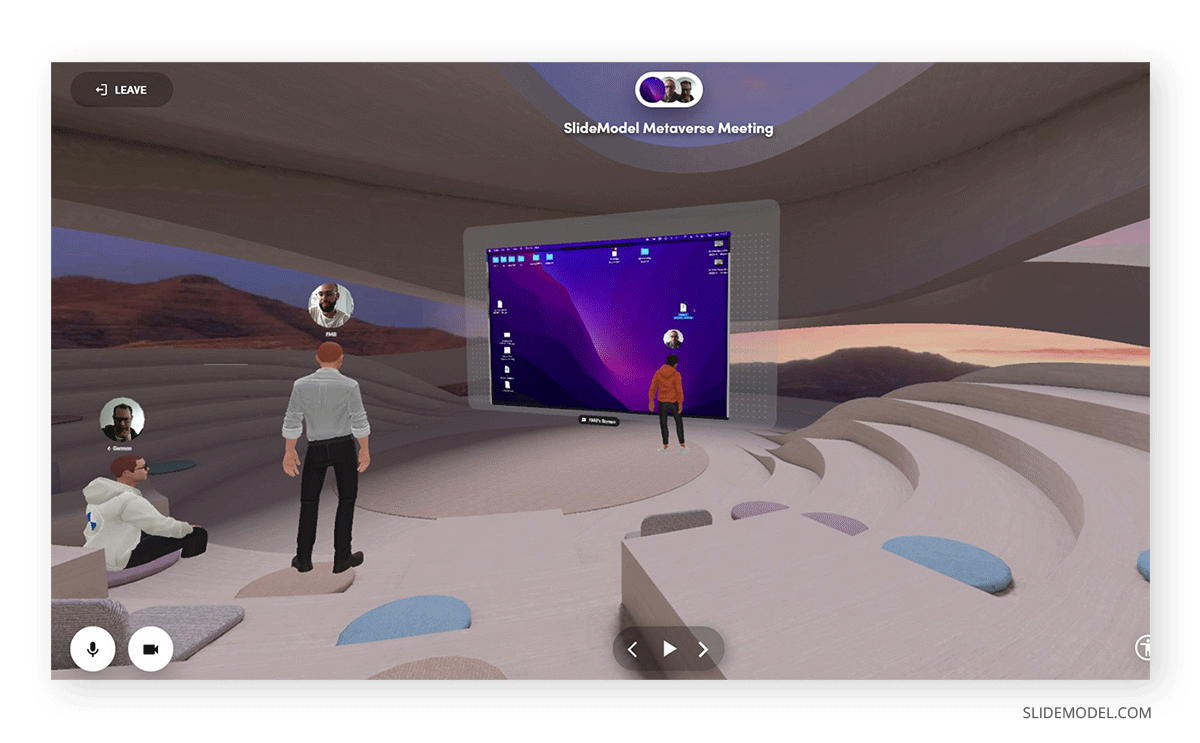

Spatial

Spatial is a platform that truly impressed us in terms of performance and graphics. It is the platform we tested the most and will be explained in more detail in our case study section. It has by far the best graphics quality in the current metaverse platform pool, and it’s targeted at events. Again, you can experience it in 2D mode via the desktop app or in fully immersive VR with the headset.

The purpose of Spatial is to create ambiances that can host formal or informal events, allowing you to upload your 3D models to the space and interact with them. We can imagine classrooms or conferences hosted via Spatial, where users can touch and engage with an object being introduced (much like the example above about a product release). There’s also the option of using Spatial as a realistic 3D art gallery, a feature that NFT artists exploited.

For iOS users, there’s an amazing feature in the Spatial app: the LiDAR Scan. This feature is only compatible with iPhone 12 Pro onwards or iPad Pro 2nd gen onwards. Using the LiDAR sensor, we can 3D scan spaces to generate entire digital versions of the environment we live in. For this technique, it is best to move the mobile device slowly around the scene, as it helps to capture the mesh in detail.

Unlike other platforms, Spatial lets users fully customize the room for the meeting. When importing 3D objects, the touch controllers act as if you were moving your hands in real life, which means you can stretch, rotate, or shrink the 3D objects to place them as required. There are two options for placing 3D objects. “Set as Environment” lets us orient the object on an infinite map in the background; the scene then morphs so that users walk inside that 3D environment. This is extremely helpful, as companies can model exact replicas of their buildings and use them to host metaverse meetings. The second option is “Set as Skybox,” which is intended for HDRI maps. Applying this option changes the background plane of your scene, making it possible to use realistic textures of cities, beach scenes, landscapes, etc. This unique component brings an immense degree of realism to Spatial meetings.

One of the perks that Spatial offers is that users without a VR headset can walk around the room by using the arrow keys, the WASD keys (as in gaming situations), or click and drag with the mouse buttons. There are other gestures available, such as jumping (spacebar), clapping (C key), and dancing (1 number key, common keyboard layout).
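The 2D controls above can be summarized as a lookup table, which is handy for a cheat sheet to send to attendees before the meeting. This is a minimal sketch and not Spatial code: the key names, the action labels, and the function are our own hypothetical choices. Only the keys and the actions they trigger (walking, jumping, clapping, dancing) come from the text.

```python
# Hypothetical cheat sheet for Spatial's 2D (non-VR) keyboard controls.
# Key names and action labels are illustrative, not Spatial identifiers.
WALK_KEYS = ("w", "a", "s", "d", "up", "down", "left", "right")

SPATIAL_2D_CONTROLS = {key: "walk" for key in WALK_KEYS}
SPATIAL_2D_CONTROLS.update({
    "space": "jump",
    "c": "clap",
    "1": "dance",
})


def action_for(key: str):
    """Return the action bound to a key name, or None if the key is unbound."""
    return SPATIAL_2D_CONTROLS.get(key.strip().lower())


if __name__ == "__main__":
    for key in ("W", "space", "c", "1", "x"):
        print(key, "->", action_for(key))
```

Sharing a table like this ahead of time shortens the learning curve for attendees joining without a headset.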

Even if you are left without the immersive experience, Spatial allows users to view spaces and interact with other users. Creating an account is mandatory if you want to create your custom avatar or in case you have to join a private room. To fully unleash the capabilities of Spatial, a subscription is required.

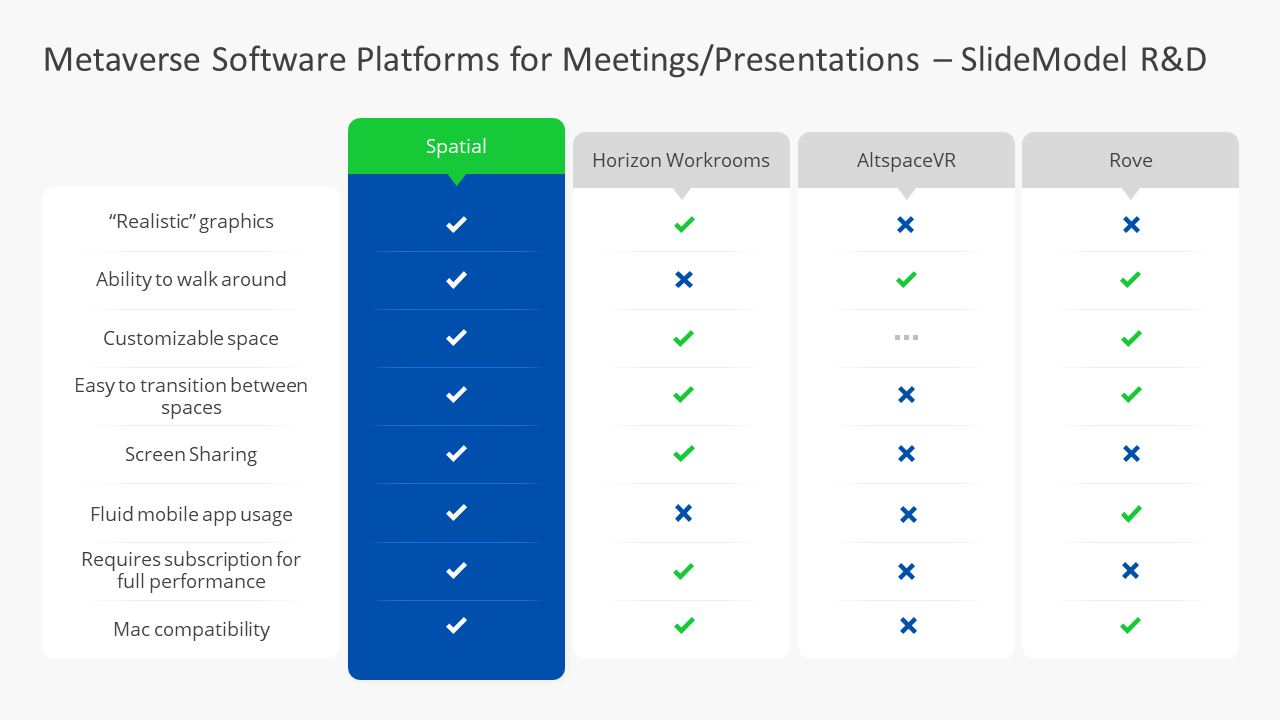

Side-by-side, we can conclude that Spatial and Horizon Workrooms are the best platforms to host metaverse meetings. We invite you to compare the features in the chart below.

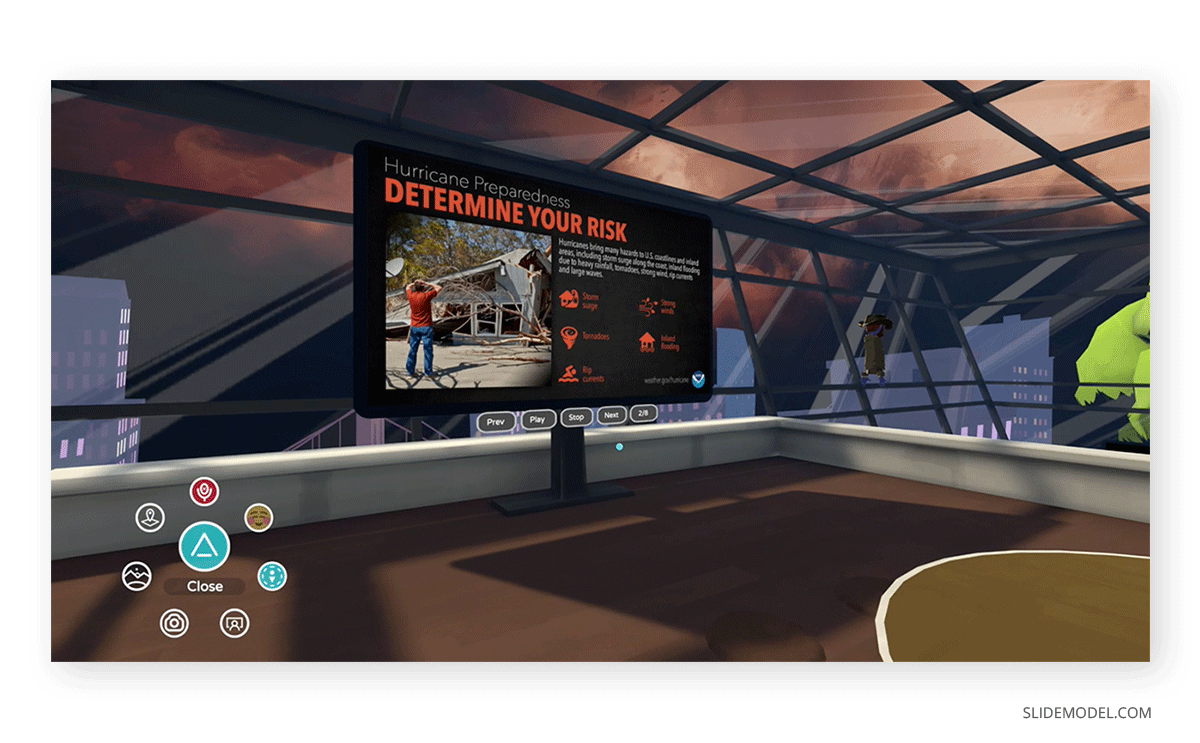

Case Study: SlideModel hosting a meeting in the metaverse

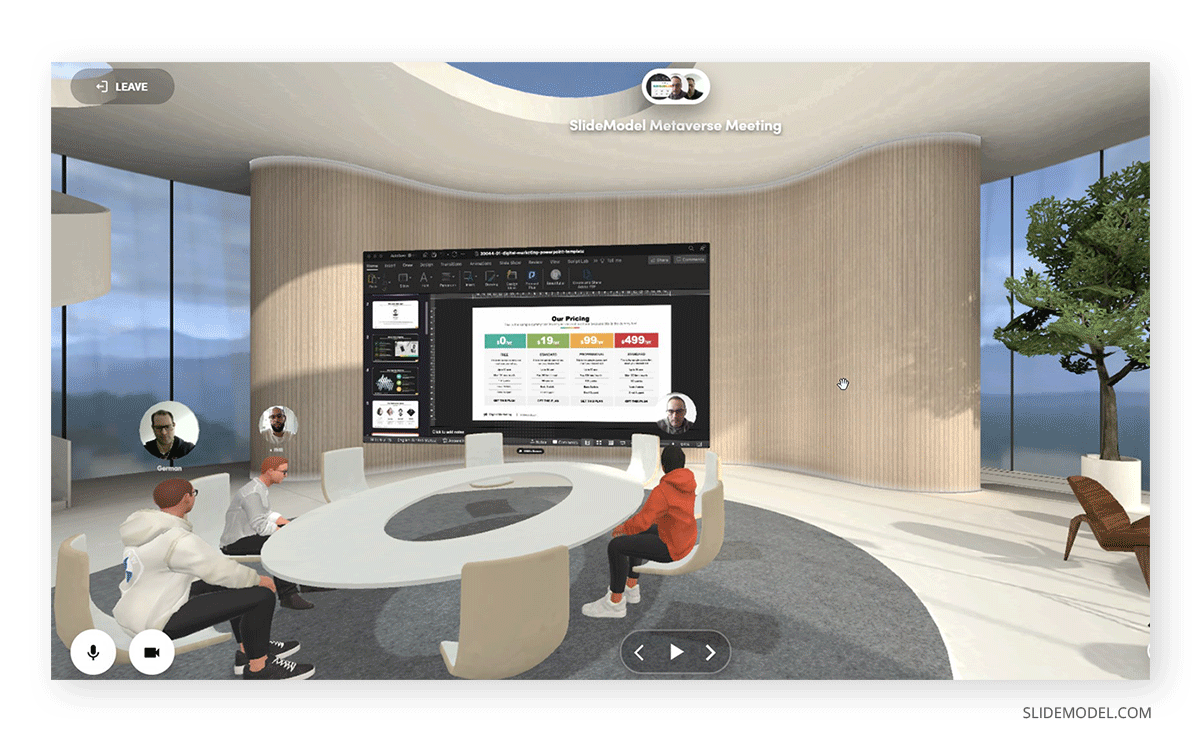

As part of our research process, SlideModel hosted an entirely virtual meeting in the metaverse between our team members. In this section, we will tell the story of how that process happened using both Spatial and Horizon Workrooms.

Connectivity process & common issues

As we were testing only one Meta Quest 2 unit, the meeting comprised the head of R&D using the VR headset, while others attended via the 2D method through the desktop app.

It’s worth mentioning these kinds of meetings have a steep learning curve. During our experience, we felt the effort of preparing a meeting in the metaverse nowadays is at least three times what a typical meeting would take. All users had to configure their Horizon Workrooms profiles, and as not every user had a headset, access to functionality was limited. In the case of Spatial, you need to configure an account to be able to create an avatar. If the meeting is hosted in a private room, as ours was, then the account is a must; in public meetings, you can join with a random avatar without creating an account.

For new users with VR headsets, it’s necessary to configure the avatar, which can be exasperating for time-constrained meetings. Also, calibrating the workspace with the touch controllers and preparing the Oculus Remote Desktop app takes considerable time. Connectivity issues can occur if the Wi-Fi signal is not stable enough. We should mention that Spatial allows screen sharing only via the desktop app, and only when using Google Chrome.

Is gear 100% required for metaverse meetings?

Horizon Workrooms

The short answer is yes. Everything is seen from afar for users attending metaverse meetings via the desktop app. It feels like watching a video from YouTube rather than following a meeting, even more detached than what Zoom could be perceived.

Although some functions require the Oculus Remote App, the truth is that users cannot interact with the scene unless they use the headset, and the admin permissions are linked to at least one user wearing the headset. Also, the room only shows people wearing VR headsets as seated avatars; users in the 2D experience don’t appear in the scene, as if they weren’t part of the meeting.

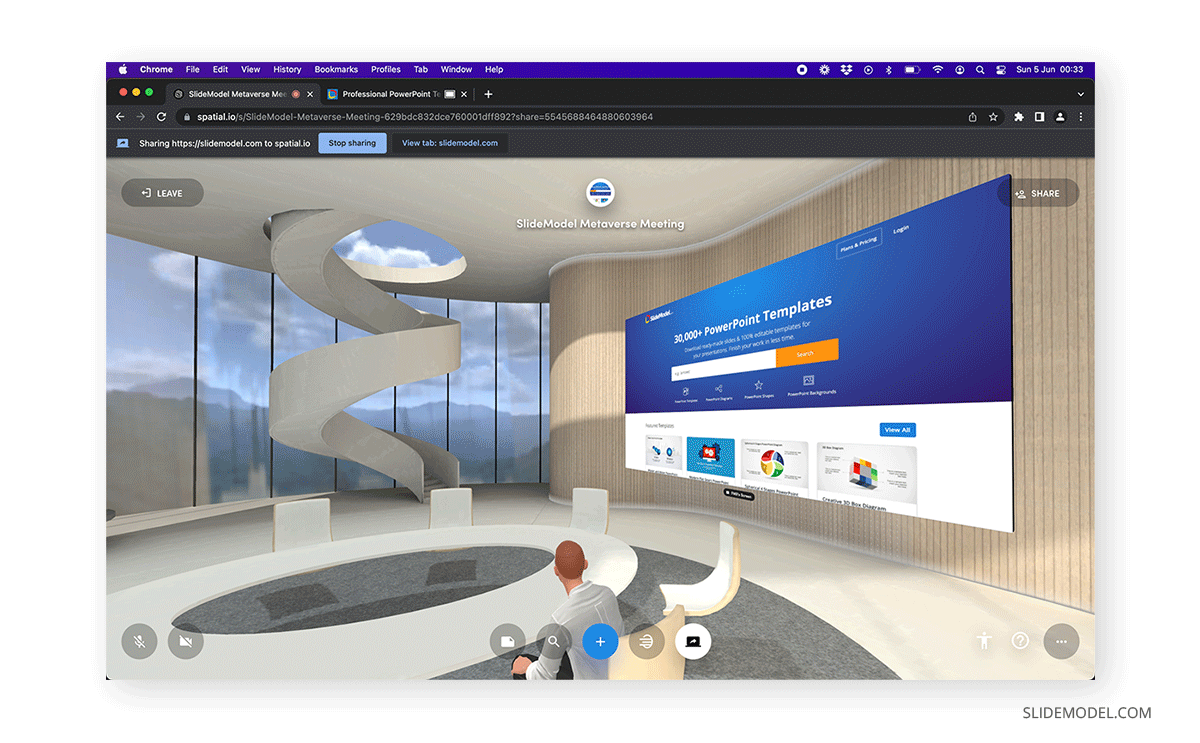

Spatial

Thankfully, Spatial takes into account that not all users own VR headsets. Unlike Horizon Workrooms, the 2D experience in Spatial resembles a first-person video game. The movement is fluid, although it takes time to get used to walking around the scene. Interacting with elements or other users is possible, but some features are limited to the VR experience.

How using a headset changes the experience

Horizon Workrooms

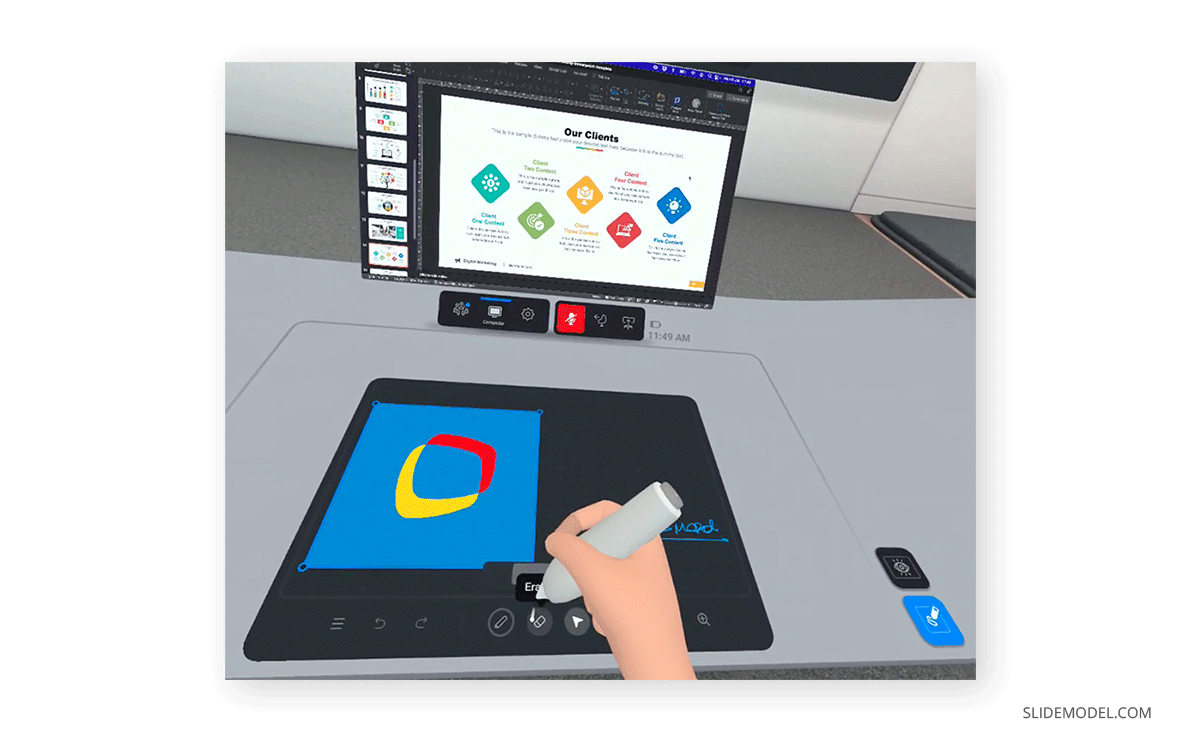

Unlike what we have seen in demo reels about Horizon Workrooms, you can only attend and interact with elements in the room while seated. We believe this will likely change in the near future as the software gets upgraded. Since nothing is pre-configured for you, you should set aside extra time to configure the workspace around you. For example, the whiteboard gestures have to be configured, and although this takes considerable time, it is 100% worth the effort.

The desk area in front of your seat accurately represents what you have in the real world. With the headset on, it rendered a digital version of our MacBook laptop. After syncing the Oculus Remote App, the screen-sharing capabilities became as fluid as in real life.

Spatial

Using the VR headset in Spatial is a real game-changer. Not only can you customize the space freely, particularly when importing and resizing 3D objects, but Spatial also offers some interesting features, such as taking a selfie with fellow meeting members.

Hand gestures are used to their current maximum potential, and we can see it in the form of note-taking, interacting with 3D models, or touching other users to greet them.

Again, this requires some training, as touch controllers are not exactly user-friendly. You get used to them with practice, but that makes the experience less than ideal for new users in professional meeting scenarios.

How to prepare the virtual office

Horizon Workrooms

Currently, Horizon Workrooms only allows tweaking minor elements of the office design. You start with a pre-built office template, in which you can upload the logo image and then alter the landscape and poster photos.

Although it may seem simple, the process is genuinely time-consuming, and presenters need to count it among the variables for time management.

Spatial

The simplest way to define the office configuration in Spatial is “absolute freedom.” GLB/GLTF are the recommended file formats for 3D objects, GLTF in particular, since its JSON-based structure helps keep the 3D asset small. If you don’t know how to export these formats, you can import 3D models in FBX or OBJ, which are standard export formats for 3D modeling apps such as Autodesk 3ds Max, Blender, Trimble SketchUp, and more. We recommend checking the Spatial 3D Model Preparation Guide before loading an object.
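As a rough illustration of how these file formats can be told apart before uploading, here is a minimal Python sketch. The function name `detect_3d_format` is our own and is not part of Spatial or any 3D tool. It relies on only two format facts: a binary .glb file starts with the ASCII bytes `glTF`, and a .gltf file is a JSON document with a top-level `asset` entry. FBX and OBJ are recognized by file extension alone, which is a simplifying assumption.

```python
import json
from pathlib import Path

GLB_MAGIC = b"glTF"  # first four bytes of a binary glTF (.glb) container


def detect_3d_format(path):
    """Return 'glb', 'gltf', 'fbx', 'obj', or 'unknown' for a model file.

    Hypothetical helper for illustration only; it does not validate the model.
    """
    path = Path(path)

    # A .glb file is identified by its magic bytes, whatever its extension.
    with path.open("rb") as f:
        if f.read(4) == GLB_MAGIC:
            return "glb"

    suffix = path.suffix.lower()

    # A .gltf file is JSON and must carry a top-level "asset" object.
    if suffix == ".gltf":
        try:
            doc = json.loads(path.read_text(encoding="utf-8"))
        except (ValueError, UnicodeDecodeError):
            return "unknown"
        return "gltf" if isinstance(doc, dict) and "asset" in doc else "unknown"

    # Assumption: FBX and OBJ files are trusted by extension only.
    if suffix in (".fbx", ".obj"):
        return suffix[1:]

    return "unknown"
```

A check like this can help sort a folder of assets into those ready to upload (GLB/GLTF) and those that may be imported as FBX or OBJ; it does not replace the platform's own preparation guide.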

For the seasoned 3D artist, this is not a complex process, but for a common user, it can be somewhat confusing and require extra assistance. The best part of this Spatial experience is that you can upload several models: say, one to shape the 3D environment, and then extra 3D assets to interact with (for example, 3D versions of upcoming products).

If you are using LiDAR to build your virtual office, be sure to set aside extra time before the meeting, as you need to move slowly to get accurate mesh results. After scanning, the scene has to be rendered, and then it refreshes and is ready for use.

Keep in mind you can also work with pre-built scenes to save time and focus only on the meeting’s content.

How to use PowerPoint templates in the Metaverse

The use of PowerPoint templates in the metaverse is one of the ideas that drove this research process. At the current stage of the technology, what you do is screen-share whatever your computer is displaying, hence the importance of installing the Oculus Remote App.

Transitioning between slides feels comfortable if your computer has enough resources to manage the experience. We don’t consider tablets and smartphones advisable, as only recent flagship devices can handle the requirements of this metaverse presentation experience. That’s not only due to processor speed but also to available RAM. Consider using mobile devices with 6 GB of RAM or more to get a fluid experience, and opt for Spatial rather than Horizon Workrooms if you plan to attend from a mobile device.

Thanks to the touch controllers, users can use gestures to discuss the topics explained in the slides, and even gesture while changing slides. It’s worth noting that achieving fluid gestures takes some practice with the headset and touch controllers. Also, the experience can make you feel somewhat dizzy at first while you adjust to the immersion.

If you need a quick method to create a presentation, check out our AI presentation maker, a tool in which you add the topic, curate the outline, select a design, and let AI do the work for you.

Translating the current real-world presentations into the metaverse

Previously, we discussed what it was like to host a meeting in the metaverse, but what does translating the entire meeting process really involve in terms of effort? Is the venture worth exploring, considering the steep learning curve? We will explore this topic in the sections below.

Interacting with the metaverse

In real-life presentations, the presenter is aware of people asking questions, their genuine interest in the topic, the reception the message is getting, and the list goes on. This is thanks to our awareness of body language and the emotional intelligence to notice when a presentation is going awry. In the metaverse, given the current state of the technology, we lose that feedback.

The use of avatars limits the feedback a presenter receives from the audience, since avatars don’t reflect the user’s body language except for hand gestures. This is a considerable limitation if we seek to cultivate quality presentation skills through experience.

Another limitation we found is the pacing of interactions between users: depending on the quality of the internet connection, users can suffer from lag. This disrupts the fluid conversation you would typically have in a conventional meeting.

Hardware requirements to host presentations in the metaverse

As we mentioned before, not every computer can host meetings in the metaverse, and the best results come from using VR headsets. Plan on having a computer no older than 2016, with a minimum of 8GB of RAM (preferably 16GB), a dedicated graphics card, an Intel Core i3 processor or equivalent, and at least 250GB of storage.
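To make these minimums easier to apply across a team, here is a minimal Python sketch that checks a machine against them. The `Machine` class, the constant names, and the `check_machine` function are hypothetical and exist only for illustration. The thresholds are the ones stated above, and the processor check is left out because “Core i3 or equivalent” cannot be compared reliably in a few lines.

```python
from dataclasses import dataclass

# Minimums as stated in the article; the constant names are our own.
MIN_YEAR = 2016
MIN_RAM_GB = 8
PREFERRED_RAM_GB = 16
MIN_STORAGE_GB = 250


@dataclass
class Machine:
    """Hypothetical description of an attendee's computer."""
    year: int
    ram_gb: int
    storage_gb: int
    dedicated_gpu: bool


def check_machine(machine):
    """Return a list of unmet minimums; an empty list means all are met."""
    problems = []
    if machine.year < MIN_YEAR:
        problems.append(f"computer is older than {MIN_YEAR}")
    if machine.ram_gb < MIN_RAM_GB:
        problems.append(f"less than {MIN_RAM_GB} GB of RAM")
    if machine.storage_gb < MIN_STORAGE_GB:
        problems.append(f"less than {MIN_STORAGE_GB} GB of storage")
    if not machine.dedicated_gpu:
        problems.append("no dedicated graphics card")
    return problems


def has_preferred_ram(machine):
    """True when the machine reaches the preferred 16 GB of RAM."""
    return machine.ram_gb >= PREFERRED_RAM_GB
```

For example, `check_machine(Machine(year=2014, ram_gb=4, storage_gb=128, dedicated_gpu=False))` would report all four issues, which gives a quick way to flag attendees who are likely to struggle before the meeting starts.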

We mention these specs because the desktop experience is mostly handled via Google Chrome in both Spatial and Horizon Workrooms. Chrome is a resource vampire for interactive applications, and it will feed on your RAM. Hence, if you don’t want to experience delays or frozen screens, your hardware has to be up to date. Spatial actively recommends using Google Chrome for some of its features, like audio and video streaming.

For a truly immersive metaverse experience, the headset is a must. Multiply that by the number of users likely to attend the presentation, and it’s a hefty sum from a hardware standpoint. Laser pointers won’t work for metaverse meetings; you need to learn how to replicate that behavior with the touch controllers. On top of this, you need to recalibrate your VR headset each time you switch locations.

Obstacles found in the Metaverse Meeting Experience

In our experience, metaverse meetings are by no means user-friendly at present. First, if the users aren’t tech-savvy, they are bound to run into many obstacles when interacting with the VR headset. Even when they are, issues arise when switching between slides or screen sharing from different computers. To share screens, users must wear VR headsets, as 2D spectators don’t have the privilege of sharing screens during metaverse meetings (nor can they be hosts for these spaces).

Keep in mind your phone won’t be compatible with this technology, so you cannot use it as a convenient way to switch between slides. For us, this meant delays when changing slides, as you need to maneuver with the touch commands (or install the desktop app on your computer).

Another obstacle we found is that metaverse meetings strongly resemble video game environments. Because of the generational gap, users who don’t belong to the centennial generation may find this unprofessional. Again, we believe these obstacles are likely to be overcome as metaverse technology develops.

Elements we consider could improve the user experience

The first element that comes to mind is the lack of IS (image stabilization). As you can see from our recorded meetings, the point of view constantly shifts without any stabilization software being applied. This is a real problem for users who intend to read data from PowerPoint presentations, as it can strain your eyes or make you feel dizzy.

The graphical aspect has to elevate its game from cartoonish avatars to real-world corporate environments, at least if the aim is to bring presentations into the metaverse.

We cannot say much about the hardware requirements, as this is simply where the technology stands today. It’s costly for most companies, and hardly ROI-minded unless they get significant perks from the experience.

When a laptop is detected through the headset, we also have to configure the screen performance, as the default settings display only the room’s configuration. Overall, the screen mirroring feature is not as fluid as what you get on a Google Meet call.

Conclusion: Are presentations in the metaverse worth the effort these days?

In the current state of technology, the metaverse is still a work in progress. It’s remarkably time-consuming to build the 3D environment for a presentation, and even more so to coach people in the methodology to follow.

Since the metaverse is like greener pastures for the presentation world, we believe there will be further development stages to bring it closer to users. However, there’s no clear guidance on when that will happen, by whom, or what the “new” experience will be.

Creating presentations in the metaverse these days makes the most sense for people who wish to prepare NFT auctions or those with specific training requirements, as we have seen in the educational applications. Since the metaverse helps bridge the gap between the digital world and real-life experiences, it can keep hazardous situations out of training sessions. From a resource perspective, the applications of the metaverse in education reduce the investment in scarce resources; for example, when working with dissections in biology lessons or hard-to-find chemical compounds for science classes.

For the common user who seeks to elevate the experience of their presentations, we feel the effort involved in creating the room, coaching the attendees, and preparing the meeting itself does not pay off in overall performance. Again, these are our conclusions based on the current technological state of the metaverse, and the situation can quickly change in the future.

We hope this article brings light to the topic of presenting in the metaverse, and we will see you next time.